We are pleased to announce a new publication at the 43rd European Conference on Information Retrieval (ECIR 2021). The paper presents a complete conversational search pipeline that combines state-of-the-art approaches to rewrite queries, re-rank results, and summarize the retrieved answers.

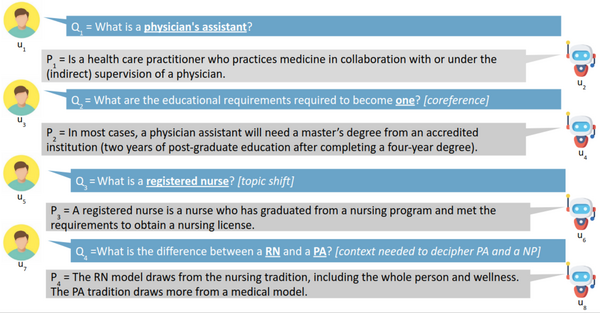

Conversational search is an information-seeking paradigm in which the user asks the system questions in natural language and receives an answer after each one, chaining multiple questions about a topic as in a conversation.

This makes conversational search a step beyond simple keyword search: the system is responsible for interpreting each question and, most importantly, the context in which it is posed.

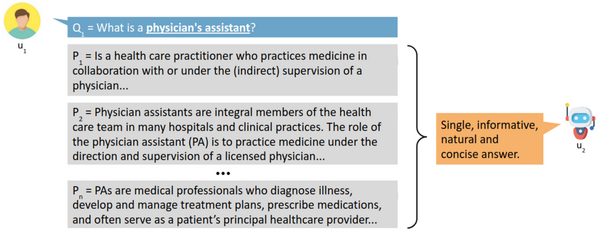

With the proposed system, we aim to enable a more natural interaction with an intelligent agent through a conversational search paradigm, while also reducing the burden on the user by summarizing the results, combining information from several sources into a single answer.

Our pipeline achieved state-of-the-art results, outperforming the best baseline submitted to TREC CAsT 2019.

Title: Open-Domain Conversational Search Assistant with Transformers

Authors: Rafael Ferreira, Mariana Leite, David Semedo, and Joao Magalhaes

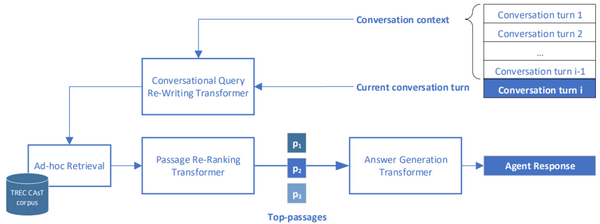

Abstract: “Open-domain conversational search assistants aim at answering user questions about open topics in a conversational manner. In this paper, we show how the Transformer architecture achieves state-of-the-art results in key IR tasks, leveraging the creation of conversational assistants that engage in open-domain conversational search with single, yet informative, answers. In particular, we propose an open-domain abstractive conversational search agent pipeline to address two major challenges: first, conversation context-aware search and second, abstractive search-answers generation. To address the first challenge, the conversation context is modeled with a query rewriting method that unfolds the context of the conversation up to a specific moment to search for the correct answers. These answers are then passed to a Transformer-based re-ranker to further improve retrieval performance. The second challenge, is tackled with recent Abstractive Transformer architectures to generate a digest of the top most relevant passages. Experiments show that Transformers deliver a solid performance across all tasks in conversational search, outperforming the best TREC CAsT 2019 baseline”.
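To make the three stages named in the abstract concrete (context-aware query rewriting, Transformer-based re-ranking, and answer generation from the top passages), the following is a minimal Python sketch of how such a pipeline can be wired together. It is not the authors' implementation: the class `ConversationalSearchPipeline`, the `toy_*` functions, the `candidates`/`top_k` parameters and the three-passage corpus are all hypothetical. The toy stages (prepending previous turns, term-overlap retrieval, length-normalized re-ranking, and plain concatenation instead of abstractive generation) are deliberately simple stand-ins for the Transformer models described in the paper.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List, Set

# Each stage is a plain function so that a real model could be swapped in.
# The toy stages further down are hypothetical stand-ins, NOT the paper's models.
Rewriter = Callable[[List[str], str], str]
Retriever = Callable[[str, int], List[str]]
Reranker = Callable[[str, List[str]], List[str]]
Summarizer = Callable[[List[str]], str]


@dataclass
class ConversationalSearchPipeline:
    rewrite: Rewriter
    retrieve: Retriever
    rerank: Reranker
    summarize: Summarizer
    candidates: int = 10  # passages fetched by first-stage retrieval
    top_k: int = 2        # passages passed on to answer generation
    history: List[str] = field(default_factory=list)

    def ask(self, utterance: str) -> str:
        # Challenge 1: context-aware search via query rewriting, then re-ranking.
        query = self.rewrite(self.history, utterance)
        ranked = self.rerank(query, self.retrieve(query, self.candidates))
        # Challenge 2: build a single answer from the top-ranked passages.
        answer = self.summarize(ranked[: self.top_k])
        self.history.append(utterance)  # keep raw turns as conversation context
        return answer


# --- Toy stand-ins over a tiny in-memory corpus (illustrative assumptions) ---
PASSAGES = [
    "The Eiffel Tower is in Paris.",
    "The Eiffel Tower was completed in 1889.",
    "Paris is the capital of France.",
]


def tokens(text: str) -> Set[str]:
    # Lowercased word set; punctuation is dropped.
    return set(re.findall(r"\w+", text.lower()))


def toy_rewrite(history: List[str], utterance: str) -> str:
    # Crude stand-in for a learned rewriter: prepend all previous turns.
    return " ".join(history + [utterance])


def toy_retrieve(query: str, k: int) -> List[str]:
    # Rank passages by the number of distinct query terms they share.
    q = tokens(query)
    scored = [(len(q & tokens(p)), p) for p in PASSAGES]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [p for score, p in ranked if score > 0][:k]


def toy_rerank(query: str, passages: List[str]) -> List[str]:
    # Stand-in for a Transformer re-ranker: overlap normalized by passage length.
    q = tokens(query)
    return sorted(passages, key=lambda p: len(q & tokens(p)) / len(tokens(p)), reverse=True)


def toy_summarize(passages: List[str]) -> str:
    # Extractive placeholder: concatenates passages (an abstractive model would rewrite them).
    return " ".join(passages)


if __name__ == "__main__":
    bot = ConversationalSearchPipeline(toy_rewrite, toy_retrieve, toy_rerank, toy_summarize)
    print(bot.ask("Where is the Eiffel Tower?"))
    print(bot.ask("When was it completed?"))  # "it" is resolved only through the prepended context
```

Keeping each stage behind a simple function signature is a design choice for illustration: it lets the toy components be replaced one at a time by real query-rewriting, re-ranking and summarization models without touching the control flow of `ask`.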

The published paper is available from Springer, and an open-access version is available on arXiv.

Examples of the summarization capabilities can be seen at: https://knowledge-answer-generation.herokuapp.com/#